MODEL-BASED TESTING

Pattern Summary

Derive test cases from a model of the Software Under Test (SUT), typically using an automated test case generator.

Category

Design

Context

The main advantages of model-based testing (MBT) are the systematic test coverage of the model and a significant reduction of the test maintenance effort. In case of changes to the SUT, only a few parts of the model have to be updated, while the test cases are updated automatically by the generator.

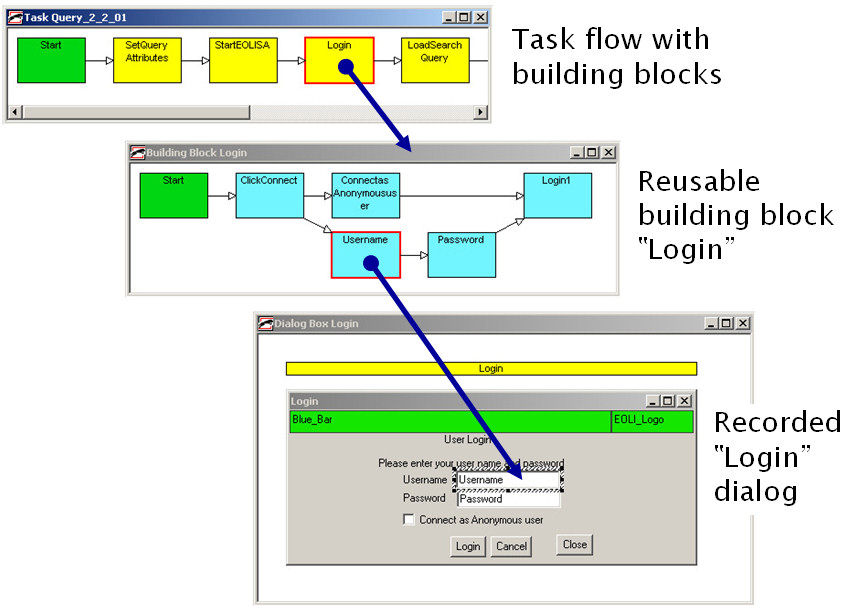

Model-based testing can be used for generating GUI test scripts, but also for producing scripts for embedded systems or even manual test instructions. The approach is especially useful for projects with many test repetitions, but will not pay off if there are only a few. Also, its efficiency depends on the re-usability of the model components. For instance, if the "Login" sequence is similar for most test cases, it can be defined as a re-usable building block. But if the test cases hardly contain any duplicate sequences, the re-usability will be low, thus increasing the effort for model creation and maintenance.

Description

After creating a test model for the SUT, test data and/or test sequences can be systematically derived from it.

Implementation

Modelling should start as early as possible in the project. Based on the requirements, a rough model can be created and then refined step by step. Technical details (e.g., information about the affected GUI objects) are added only in the last stage. Particular attention has to be paid to the clarity of the test model and the re-usability of its components. The use of parametrizable building blocks (sub-sequences) is highly encouraged.
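As an illustration of parametrizable building blocks, here is a minimal Python sketch in which the "Login" sub-sequence is defined once and reused inside a longer test sequence. The step format `(keyword, target, value)` and all names (`login`, `logout`, `change_password`, the GUI object names) are hypothetical and do not correspond to any particular MBT tool.

```python
# Hypothetical keyword steps of the form (keyword, target, value).
# None of these names come from a real tool; they only illustrate re-use.

def login(user, password):
    """Reusable building block: the login sub-sequence, parametrized by credentials."""
    return [
        ("open", "login_page", None),
        ("type", "username_field", user),
        ("type", "password_field", password),
        ("click", "login_button", None),
    ]


def logout():
    """Reusable building block: a one-step logout sub-sequence."""
    return [("click", "logout_button", None)]


def change_password(new_password):
    """Building block for the feature under test (assumed GUI objects)."""
    return [
        ("open", "settings_page", None),
        ("type", "new_password_field", new_password),
        ("click", "save_button", None),
    ]


def change_password_test(user, old_password, new_password):
    """Compose building blocks into one test sequence; login is used twice."""
    return (
        login(user, old_password)
        + change_password(new_password)
        + logout()
        + login(user, new_password)
    )


if __name__ == "__main__":
    for step in change_password_test("alice", "old-pw", "new-pw"):
        print(step)
```

If the login dialog changes, only `login` has to be adapted, and every sequence built from it picks up the change when it is regenerated.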

The generation algorithm will search for valid paths through the model until the required coverage criteria are met. Apart from test sequences, some tools can also generate test data using systematic methods like equivalence partitioning or cause/effect analysis.
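The following Python sketch shows one simple way such a path search could work, assuming the model is a small state machine and the coverage criterion is "every transition is exercised at least once". The model contents (`MODEL`, `START`) and the function names are invented for illustration; real generators use more elaborate models and criteria.

```python
from collections import deque

# Hypothetical test model: state -> list of (action, next_state).
MODEL = {
    "LoggedOut": [("login", "Home")],
    "Home": [("open_settings", "Settings"), ("logout", "LoggedOut")],
    "Settings": [("save", "Home"), ("cancel", "Home")],
}
START = "LoggedOut"


def shortest_path_to(model, start, target):
    """Breadth-first search; returns the (state, action) steps leading to target."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, steps = queue.popleft()
        if state == target:
            return steps
        for action, nxt in model.get(state, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, steps + [(state, action)]))
    return None  # target is not reachable from start


def generate_tests(model, start):
    """Emit action sequences until every reachable transition is covered."""
    uncovered = {(s, a) for s, edges in model.items() for a, _ in edges}
    tests = []
    for state, action in sorted(uncovered):
        if (state, action) not in uncovered:
            continue  # already covered by an earlier test
        prefix = shortest_path_to(model, start, state)
        if prefix is None:
            continue  # unreachable source state; transition stays uncovered
        steps = prefix + [(state, action)]
        uncovered.difference_update(steps)
        tests.append([a for _, a in steps])
    return tests


if __name__ == "__main__":
    for test in generate_tests(MODEL, START):
        print(" -> ".join(test))
```

This sketch favors short, independent test cases. A generator could equally try to cover all transitions with as few long sequences as possible; which is preferable depends on how expensive test setup is in the project.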

Figure: Abstraction Levels of a Test Model

Potential problems

In many projects, the requirements / design specifications do not have the level of detail required for creating a model. Therefore, often a lot of effort has to be spent to gather the missing information. On the other hand, many weaknesses and inconsistencies in these documents are detected at an early stage just by creating the model.

Another issue with many test generators is that they only produce sequences of abstract keywords rather than executable scripts, e.g., for test execution tools. To avoid additional implementation and maintenance effort, it is important to choose an MBT tool that is able to produce complete scripts for the planned execution tool.

Issues addressed by this pattern

BRITTLE SCRIPTS

OBSCURE TESTS

SCRIPT CREEP

Experiences

Stefan Mohacsi:

We have been developing a tool for model-based testing since 1997 and have applied it in numerous projects, e.g., at the European Space Agency (see chapter 9 of "Experiences of Test Automation"). Over these years, we have learned many important lessons:

- Decide for which test cases model-based design shall be used. Typical candidates are tests for requirements that can easily be formalized as a model. On the other hand, for some requirements MBT might not make sense.

- Avoid reusing a model that has also been used for code generation. If the code and the tests are generated from the same model, no deviations will be found! Try to create a specific model from the testing perspective instead. In our tool TEMPPO Designer (formerly called IDATG), we tried to invent a simple notation that is focused on testing and easy to learn.

- Creating a test model is a challenging task that requires many different skills (test design, abstraction, understanding of requirements, technical background). Don’t expect end users to perform this task but employ specialists instead!

- Start modeling at an abstract level, then add more detail step by step.

- Pay attention to the reusability and maintainability of the model components, e.g., use parametrizable building blocks.

- Make sure that requirements engineers and software designers are available to clarify any questions from the test designers.

- Try to cover both test data and test sequence-related aspects in your model (e.g., define equivalence partitions for test data, activity diagrams for sequences). Finally, connect the two aspects by specifying which data is used in which step of the sequence. At first, TEMPPO Designer only supported sequence generation; data generation was added in 2006.

- Combine different test methods to increase the defect detection potential. Use random generation in addition to systematic methods.

- Choose a tool that can generate executable test scripts, not just keywords. Nowadays, TEMPPO Designer can produce complete scripts for HP QuickTest and TestPartner, but also for testing embedded devices.

- The effort invested into a well-structured model design will pay off eventually (in our ESA project, the ROI was reached after 4 test repetitions). If designed properly, only some parts of the model have to be adapted. Afterwards, all test cases can be updated automatically by re-generating them.
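Two of the lessons above, connecting test data to sequence steps and adding random generation to systematic methods, can be sketched in Python as follows. This is not how TEMPPO Designer works internally; the partitions, the step format `(keyword, target, variable)` and the function names are assumptions made up for this example.

```python
import random
import string
from itertools import product

# Hypothetical equivalence partitions: variable -> {partition label: representative}.
PARTITIONS = {
    "username": {"valid": "alice", "empty": ""},
    "password": {"valid": "s3cret!", "too_short": "abc"},
}

# Hypothetical sequence; the third element names the data variable used in that step.
SEQUENCE = [
    ("type", "username_field", "username"),
    ("type", "password_field", "password"),
    ("click", "login_button", None),
]


def bind(sequence, data):
    """Replace each variable name in the sequence with a concrete value."""
    return [(kw, target, data[var] if var else None) for kw, target, var in sequence]


def systematic_tests(sequence, partitions):
    """One test per combination of partitions (full cartesian product)."""
    names = sorted(partitions)
    tests = []
    for combo in product(*(partitions[n].items() for n in names)):
        labels = {n: label for n, (label, _) in zip(names, combo)}
        data = {n: value for n, (_, value) in zip(names, combo)}
        tests.append((labels, bind(sequence, data)))
    return tests


def random_tests(sequence, variables, count, seed=0):
    """Extra tests with random printable strings; a fixed seed keeps runs reproducible."""
    rng = random.Random(seed)
    tests = []
    for _ in range(count):
        data = {
            v: "".join(rng.choice(string.printable) for _ in range(rng.randint(0, 20)))
            for v in variables
        }
        tests.append(({v: "random" for v in variables}, bind(sequence, data)))
    return tests


if __name__ == "__main__":
    all_tests = systematic_tests(SEQUENCE, PARTITIONS) + random_tests(SEQUENCE, PARTITIONS, 3)
    for labels, steps in all_tests:
        print(labels, steps)
```

The full cartesian product grows quickly with the number of variables, so for larger models a reduced strategy (for example, one test per partition) may be the more practical choice.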

More tips can be found in the whitepaper "How to Make the Most of Model-based Testing".

See also: Homepage of the MBT tool Atos TEMPPO Designer

Bas Dijkstra:

I applied this pattern successfully on a recent project, where I was asked to implement a test automation suite for testing a web service that validated large fixed-length messages. There were around 20 types of these messages, and each consisted of 100+ fields with various syntax constraints (text/number, length, optional vs. mandatory, etc.). In addition, there were semantic constraints as well (field value needs to be one of a list of predefined values, field A cannot be empty if field B is empty, etc.).

All of these specifications were stored in an Excel worksheet (consider this our 'model'). I wrote a module that executed the following steps for all fields in all messages:

- Read specifications for a certain field in a certain message

- Generate negative test cases based on these specifications (leave a mandatory field empty, fill a numeric field with an alphanumeric value, generate values that are not in a predefined list, violate simple business rules, etc.)

- Generate a message (based on a message template) that is correct, except for the field under test for that test case

- Validate the message using the validation service (our SUT) and check whether the error code corresponding to the corrupted field is returned
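The first three steps above could look roughly like the following Python sketch. It is not the original module: the field layout, the error codes, the message template and all names (`FieldSpec`, `negative_values`, `generate_cases`) are invented for illustration, and the specifications are hard-coded here rather than read from an Excel worksheet. The final step, calling the validation service, is left out because its interface is not described.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """Hypothetical specification of one field in a fixed-length message."""
    name: str
    start: int            # zero-based offset within the message
    length: int
    kind: str             # "text" or "number"
    mandatory: bool
    allowed: tuple = ()   # predefined values, if the field is restricted to a list
    error_code: str = ""  # code the validator is expected to return


# Assumed example data; a real project would load this from the specification.
SPECS = [
    FieldSpec("msg_type", 0, 2, "text", True, ("AB", "CD"), "E001"),
    FieldSpec("amount", 2, 6, "number", True, (), "E002"),
    FieldSpec("comment", 8, 10, "text", False, (), "E003"),
]
TEMPLATE = "AB000123hello     "  # a correct 18-character message


def negative_values(spec):
    """Yield (description, corrupted value) pairs derived from one field spec."""
    if spec.mandatory:
        yield "mandatory field left empty", " " * spec.length
    if spec.kind == "number":
        yield "alphanumeric value in numeric field", "X" * spec.length
    if spec.allowed:
        candidate = "#" * spec.length
        if candidate not in spec.allowed:
            yield "value outside predefined list", candidate


def corrupt(template, spec, value):
    """Return the template with only the field under test replaced."""
    return template[:spec.start] + value + template[spec.start + spec.length:]


def generate_cases(template, specs):
    """One negative test case per field and per applicable corruption."""
    for spec in specs:
        for description, value in negative_values(spec):
            yield {
                "field": spec.name,
                "description": description,
                "message": corrupt(template, spec, value),
                "expected_error": spec.error_code,
            }


if __name__ == "__main__":
    for case in generate_cases(TEMPLATE, SPECS):
        print(case)
```

Each generated case carries the expected error code, so a test runner only has to send `message` to the SUT and compare the returned code with `expected_error`.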

Using this approach I have been able to design and execute thousands of test cases within a couple of days, all directly from specifications (our 'model'). Lessons learned:

- In this particular example, it was easiest to focus on the negative test cases. These were both the most important for the test (as the SUT was a validation service) and the easiest to generate automatically from specifications. Your mileage may vary, though: I can imagine that in other MBT projects it will be easier to focus on positive test cases / happy flows.

- Start simple. Don't waste effort automatically generating test cases for the most complex business rules, as it's likely to be easier to test these by hand. Generate the easiest test cases first and see how far you can go until the benefits no longer outweigh the investment. The Pareto principle most definitely applies here.
